A world-first study has found that the more evidence ChatGPT is given alongside a health-related question, the less reliable it becomes, with the accuracy of its responses falling to as low as 28%.

The study was recently presented at Empirical Methods in Natural Language Processing (EMNLP), a natural language processing conference. The findings are published in the Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing.

As large language models (LLMs) like ChatGPT explode in popularity, they pose a potential risk to the growing number of people using online tools for key health information.

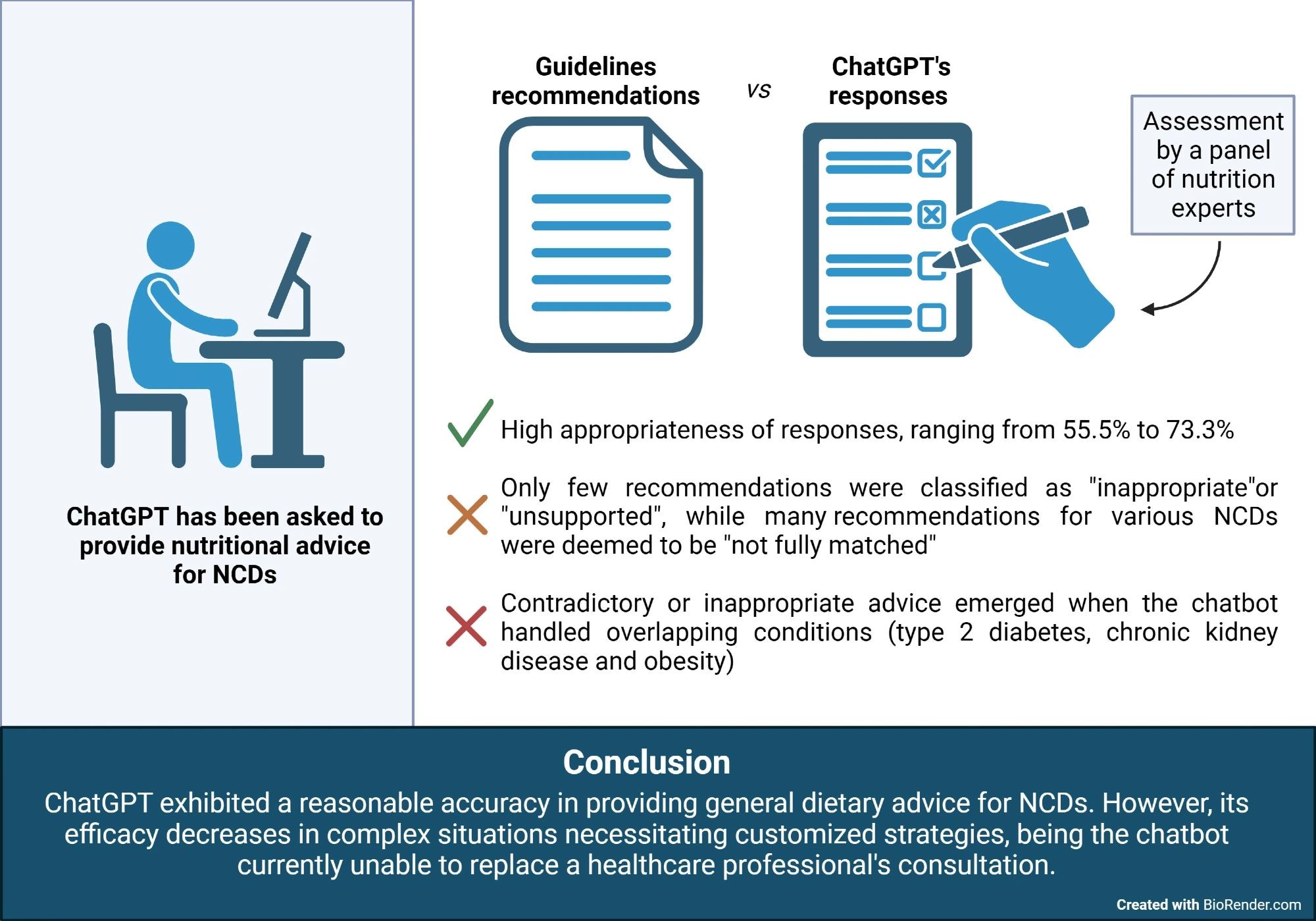

Scientists from CSIRO, Australia’s national science agency, and The University of Queensland (UQ) explored a hypothetical scenario of an average person (non-professional health consumer) asking ChatGPT if “X” treatment has a positive effect on condition “Y.”

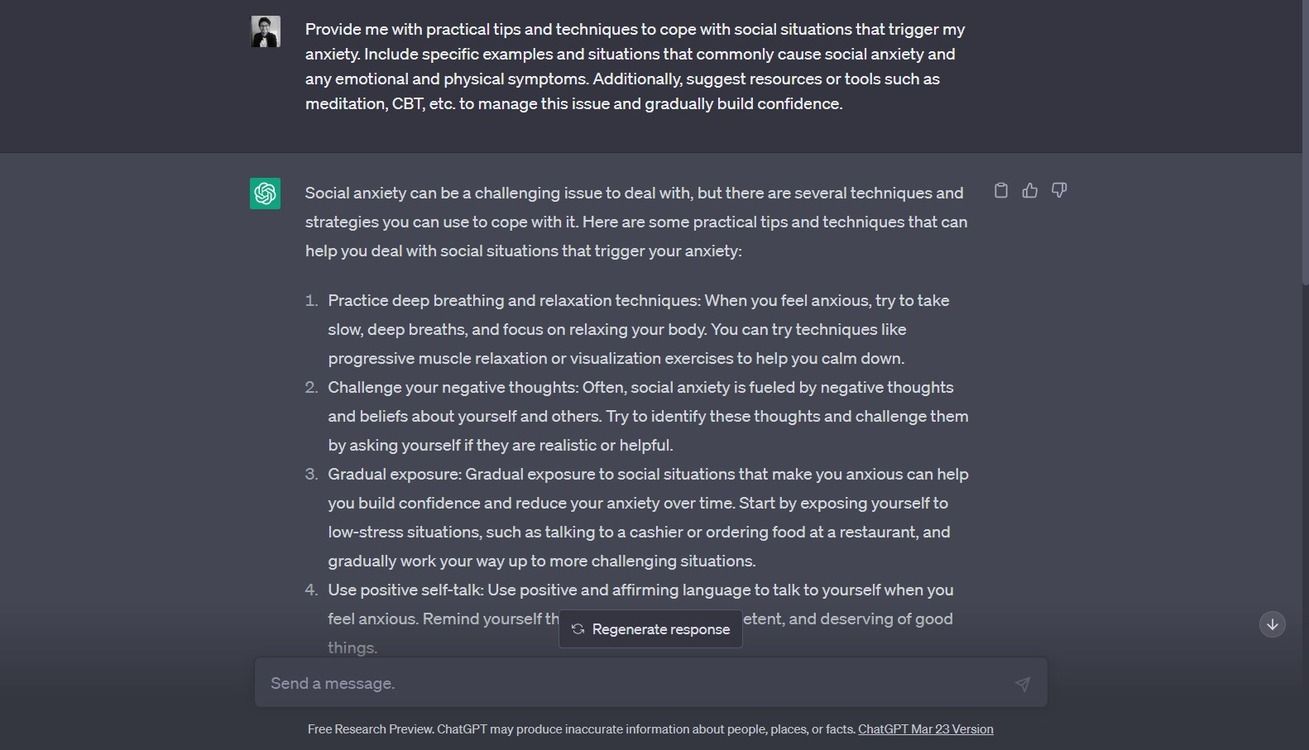

The 100 questions presented ranged from “Can zinc help treat the common cold?” to “Will drinking vinegar dissolve a stuck fish bone?”

ChatGPT’s response was compared to the known correct response, or “ground truth,” based on existing medical knowledge.
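As a rough illustration of this kind of scoring, the sketch below computes accuracy as the share of responses that match the ground truth. It is a minimal example under stated assumptions, not the researchers' evaluation code: the `accuracy` function, the yes/no answer format, and the sample data are all hypothetical.

```python
def accuracy(responses, ground_truth):
    """Return the fraction of responses that match the known correct answer."""
    if len(responses) != len(ground_truth):
        raise ValueError("responses and ground_truth must have the same length")
    if not responses:
        raise ValueError("no questions to score")
    # Count the questions where the model's answer equals the ground truth.
    correct = sum(r == g for r, g in zip(responses, ground_truth))
    return correct / len(responses)


# Hypothetical answers, invented for illustration only (not data from the study).
ground_truth = ["yes", "no", "no", "yes"]
responses = ["yes", "no", "yes", "yes"]

score = accuracy(responses, ground_truth)
print(f"Accuracy: {score:.0%}")
```

With these made-up answers, three of the four responses match, so the score is 0.75.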

CSIRO Principal Research Scientist and Associate Professor at UQ Dr. Bevan Koopman said that even though the risks of searching for health information online are well documented, people continue to seek health information online, and increasingly via tools such as ChatGPT.

“The widespread popularity of using LLMs online for answers on people’s health is why we need continued research to inform the public about risks and to help them optimize the accuracy of their answers,” Dr. Koopman said. “While LLMs have the potential to greatly improve the way people access information, we need more research to understand where they are effective and where they are not.”

The study looked at two question formats. The first was a question only.